Meta recently rolled out a new creative testing feature that allows advertisers to A/B test individual ads.

This feature stops Meta from optimizing delivery toward specific ads, so you can get a clearer idea of how each ad in the test performs when given similar ad spend. Since it’s an A/B test, there won’t be overlap between the ads (each person will only see one of the ads).

I recently gave it a spin. In this post, I’ll cover the following:

- How the creative testing feature works

- My experience with it

- A few complaints about how it could be better

- Creative testing approaches

- Best practices and recommendations

Let’s get to it…

How it Works

You can initiate a creative test from an existing or draft campaign. There are two important requirements:

1. Daily Budget. This feature does not support lifetime budgets.

2. Highest Volume Bid Strategy. You cannot use the Cost Per Result Goal, Bid Cap, or ROAS Goal bid strategies.

When editing or creating the ad, scroll down to Creative Testing (it should be immediately after the Ad Creative section).

Click “Set Up Test.” It should bring up a view that looks like this…

1. Select the number of ads you want to test.

You can create between two and five test ads. This will generate duplicates of the current ad you’re editing, which will be used in the test. The current ad will not be part of the test.

2. Set your test budget.

This is the amount of your overall campaign or ad set budget that will be set aside for this test. Meta recommends dedicating no more than 20% of your budget to this test to reduce the impact it will have on the existing ads.

If your campaign or ad set doesn’t include any other ads, more of your budget will be used for the test.

3. Define how long the test should run.

This seems to be 7 days by default, but I haven’t seen any other guidance from Meta on this. Consider the budget used, expected Cost Per Result, and overall volume that will be generated to make your test results meaningful.

4. Define the metric that will compare results.

Cost Per Result is the logical selection for most cases, but there are options for CPC, CPM, and cost per any standard event, custom event, or custom conversion.

5. Click confirm.

You’re only confirming the settings at this point. You aren’t publishing the ads.

6. Edit the ads.

Since you only created ad duplicates, you will need to edit your ads before publishing them.

Make sure that the differences between the ads are significant, rather than subtle text or creative variations. Otherwise, it’s more likely that any variance in results could be attributed to randomness. Consider different formats, creative themes, customer personas, and messaging angles.

Also note that the ad that you initiated this test from is not included in the test. It will either be active or in draft. If it’s in draft, you may decide to delete that ad before publishing.

7. Publish your ads.

When you’ve finished editing the ads that will be used in your test, publish them.

Meta should distribute similar ad dollars to each ad in the test. Note that you may see differences on the first day, depending on when each ad passed through review, approval, and preparing stages. If one ad is active before the others, it will receive the initial budget.

During the test, you can hover over the beaker icon next to an ad name to get top-level results.

8. After the test.

When the test has completed, delivery of your ads will continue. But because the test is over, Meta will no longer focus on maintaining similar ad spend across your ads. You also shouldn’t expect Meta to automatically spend the most on the ad that performed best in your test.

You can get the full test results in the Experiments section.

I’ll share more on this related to my test below.
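Before setting up a test, it can help to check a plan against the requirements and guidance above. The following is a minimal sketch, not anything Meta provides: every name in it (`CreativeTestPlan`, `check_plan`, the string labels for budget type and bid strategy) is hypothetical, and the $250 daily budget is an illustrative number chosen so that a $50 test equals 20%.

```python
from dataclasses import dataclass
from typing import List

# All names below are hypothetical labels for this sketch, not Meta API fields.
RECOMMENDED_MAX_TEST_SHARE = 0.20  # Meta's suggested ceiling when other ads are running


@dataclass
class CreativeTestPlan:
    budget_type: str          # "daily" or "lifetime"
    bid_strategy: str         # e.g. "highest_volume", "bid_cap"
    num_test_ads: int
    daily_budget: float       # campaign or ad set daily budget (assumed positive)
    test_daily_budget: float  # portion set aside for the test
    has_other_ads: bool = True


def check_plan(plan: CreativeTestPlan) -> List[str]:
    """Return a list of problems; an empty list means the plan looks fine."""
    problems = []
    if plan.budget_type != "daily":
        problems.append("error: creative testing requires a daily budget")
    if plan.bid_strategy != "highest_volume":
        problems.append("error: only the Highest Volume bid strategy is supported")
    if not 2 <= plan.num_test_ads <= 5:
        problems.append("error: a test needs between two and five ads")
    share = plan.test_daily_budget / plan.daily_budget
    # The 20% figure is a recommendation, and only matters if other ads share the budget.
    if plan.has_other_ads and share > RECOMMENDED_MAX_TEST_SHARE:
        problems.append(
            f"warning: test uses {share:.0%} of budget; Meta recommends 20% or less"
        )
    return problems


plan = CreativeTestPlan("daily", "highest_volume", 5,
                        daily_budget=250.0, test_daily_budget=50.0)
print(check_plan(plan))
```

The hard requirements are reported as errors and the 20% guidance as a warning, since Meta only recommends that ceiling.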

My Test

I created a test of five ads that focused primarily on format differences:

- Flexible Format (9 images)

- Flexible Format (9 videos)

- Carousel (9 images)

- Single image/video ad (image or video by placement)

- Carousel promoting two lead magnets (2 images)

The performance goal was “Maximize number of conversions” where the conversion was a registration. These ads primarily promoted a free registration for The Loop, but one of the ads was a carousel that promoted both The Loop and Cornerstone Advertising Tips.

Because I used the Complete Registration event and expected a Cost Per Result in the $3-5 range, I dedicated $50 per day to this test. I ended it a little early to write this blog post.
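To see what that budget could produce, here is a rough back-of-the-envelope sketch. It assumes the full default 7-day window (my test ended earlier than that) and a perfectly even split across the five ads, which Meta only approximates. The function name is made up for this example.

```python
def expected_conversions_per_ad(daily_budget, days, num_ads, cost_per_result):
    """Estimate conversions per ad, assuming spend is split evenly across ads."""
    total_spend = daily_budget * days
    spend_per_ad = total_spend / num_ads
    return spend_per_ad / cost_per_result


# $50 per day, 5 ads, expected Cost Per Result between $3 and $5.
low = expected_conversions_per_ad(50, 7, 5, cost_per_result=5.0)
high = expected_conversions_per_ad(50, 7, 5, cost_per_result=3.0)
print(f"{low:.1f} to {high:.1f} registrations per ad")  # 14.0 to 23.3
```

That range is well short of 50 conversions for any single ad, which is worth keeping in mind when reading the results.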

Within the Experiments section, here is the result overview…

And a table of the results…

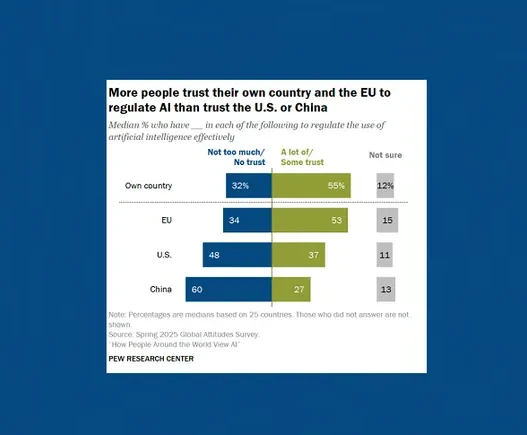

The top performer in my test was the Flexible Format version using images, and it wasn’t that close. While there will always be randomness in results, the clear lowest performer was the carousel version with two cards, each promoting a different lead magnet. It also shouldn’t be ignored that each of the top two performing versions utilized Flexible Format, with the video version costing about $1 more per registration.

Meta also offers a visual breakdown by age…

And gender…

Functionality Complaints

My primary complaint about the creative testing feature is that you can’t test existing ads. You have to duplicate an existing (active or draft) ad, and the duplicates will be part of the test. It’s especially confusing that the ad the test was initiated from isn’t included.

But it’s not just a matter of confusion. Not being able to test existing ads also limits the feature’s value. If you have five ads in an active ad set, you may question why Meta is distributing a high percentage of your budget to one ad and a low percentage to another. A test consisting of these five ads to confirm that distribution would help answer these questions. But you have to create new ads.

And that would mean either adding duplicate ads to an existing ad set or creating a separate ad set for testing. If you’re going to replicate what you already have running, the separate ad set would make the most sense. But it would conceivably be a whole lot easier to keep the current ad set and simply initiate a test of those existing ads.

Because you have to generate duplicates that you then need to edit (you can’t individually duplicate each ad that you want in the test), it can also be a whole lot of extra work. In my example, I used versions of Flexible Format and carousels that each included nine creative variations. It’s a major pain to have to build all of that over again.

I hope that this is just an early version of Creative Testing and that we’ll get the ability to test existing ads eventually.

Creative Testing Approaches

There are a couple of ways to approach creative testing with this feature…

1. Test existing ad copy and creative combinations.

Because you’re generating duplicates, this becomes messy quickly. If you are going to test existing ad copy and creative combinations, I encourage you to create a separate ad set for this purpose. I’d also recommend using Advantage+ Campaign Budget in that case.

That’s actually what I did in my example above. I wasn’t testing new ad copy and creative combinations. I created a new ad set in the existing campaign that only included the test versions of those ads.

2. Test completely new ad copy and creative combinations.

This is actually where the feature could be very useful in its current form. It could alter how you approach ad set creation from scratch. It could work like this…

When you’re ready to start a completely new campaign or ad set with fresh creatives, start with the test. Begin with up to five very diverse ideas. Use different formats, creative themes, customer profiles, and messaging angles so that you don’t end up with similar results for each of your ads.

When the test is complete, allow that first batch of ads to keep running. But learn from the results to generate a new batch of ads to test. And in this case, add those ads to the original ad set.

Using this approach, creative testing could be an ongoing process for a single ad set. You learn from each test to help inform how you develop the next batch.

Best Practices and Recommendations

I encourage you to experiment with Meta’s creative testing feature. I truly believe it’s a huge improvement over how most advertisers currently run their creative tests. But I have a few recommendations on how to get the most out of it…

1. Use levers to generate meaningful results.

Consider your expected Cost Per Action when determining the test budget, length of your test, and number of ads to be tested. I would always start with the old rule of thumb of 50 optimized events in a week. If you’re not able to get that, your results are less meaningful. You want results that are so clear that if you ran the test again, you’d get similar results.

Even in my test above, I’d likely run it for a longer window next time. I’d love to see the highest performing ad get closer to 50 conversions by itself.

2. Test meaningfully different ads.

If the ads in your test only have subtle differences in text or creative, or if they all utilize the same format, you aren’t likely to get meaningful results. Lean into Meta’s request for creative diversification in the era of Andromeda. The more different these ads are, the more meaningful your results will be.

3. Don’t micromanage your test results.

Once the test is completed, your ads will continue to run without the restrictions of your creative test settings. That means that Meta will no longer split your budget evenly between your ads. And it’s possible that Meta won’t distribute the most budget to the highest performing ad from your test (or the least budget to the lowest performer).

This is also true if you test existing combinations of ad copy and creative. You may see that the top performer is different from what Meta has been favoring.

Resist the urge to overreact to these results, particularly if they represent a small sample size. I fully expect advertisers to get upset about this one because they assume that what happened in a test is predictive of the future. And if Meta doesn’t distribute budget in a way that’s consistent with the test results, it could create frustration.

4. Test and learn.

Your main goal in using the creative testing feature shouldn’t be to fix how Meta is distributing your budget. Instead, it’s to learn from results while budget is distributed evenly.

What performed well? What didn’t? Apply these lessons to your next batch of ads.
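The 50-events rule of thumb from the first recommendation can be turned into quick planning math. This is a sketch under simple assumptions: spend is split evenly across ads, Cost Per Result stays constant, and the function names are invented for illustration. The $4 Cost Per Result is just the midpoint of the $3-5 range from my test.

```python
import math


def min_daily_budget(cost_per_result, target_events=50, days=7):
    """Daily test budget needed to reach the target event count across the whole test."""
    return target_events * cost_per_result / days


def days_for_each_ad(daily_budget, num_ads, cost_per_result, target_events=50):
    """Days needed for every ad to reach the target on its own, given an even split."""
    spend_needed = target_events * cost_per_result * num_ads
    return math.ceil(spend_needed / daily_budget)


# About $28.57 per day gets the test as a whole to 50 events in a week at $4 each.
print(round(min_daily_budget(4.0), 2))

# Getting each of 5 ads to 50 events at $50 per day takes far longer than a week.
print(days_for_each_ad(50, 5, 4.0))  # 20
```

If the second number is longer than you’re willing to wait, the levers are the same ones mentioned above: raise the test budget, lengthen the test, or test fewer ads.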

Your Turn

Have you experimented with this tool yet? What do you think?

Let me know in the comments below!

The post Meta’s Creative Testing Tool: Setup, Strategy, and Results appeared first on Jon Loomer Digital.